Researchers in the Autonomous Systems and Biomechatronics Lab (ASBLab) have been developing assistive robots for more than 15 years to help older adults perform activities of daily living (ADLs) and maintain their health and wellbeing, promoting aging-in-place. Second-nature tasks such as dressing, eating and caring for ourselves can become more challenging as we age. New technology from the ASBLab is helping older adults maintain and restore their independence through increased engagement with socially assistive robots.

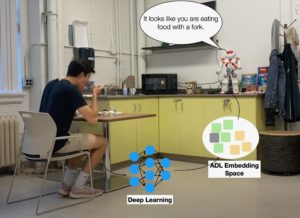

An open challenge has been a robot’s limited autonomy in assisting with a wide range of ADLs. As a result, additional human assistance is often needed to initiate interactions between an older user and the robot, even when the goal is for the robot to help independently. Professor Goldie Nejat, who holds the Canada Research Chair in Robots for Society, and her ASBLab team have developed a novel multi-modal deep learning architecture for human activity recognition and classification that enables socially assistive robots to autonomously identify and monitor ADLs and provide further assistance to older adults. They have also incorporated embedded and wearable sensors to enable more intuitive human-robot interactions and more opportunities for older adults to age in place in their homes.

“Our aging population is expected to reach 2.1 billion by 2050,” says Nejat, who is also part of U of T’s Robotics Institute. “We are exploring new ways of integrating technologies to assist older adults and those living with cognitive impairments by increasing the perceptions and behaviours for socially assistive robots to provide individualized person-centred care.”

By adding wearable sensors to clothing and using multi-modal inputs to track ADLs, ASBLab researchers are enabling robots to learn from their environment and be more responsive to a user’s changing needs. Assistive behaviours for dressing, eating and even exercising have been tailored to adapt to changes that occur during interactions.

“Wearable sensors were designed into clothing to give our socially assistive robot Leia more intuitive prompts when dressing,” says Fraser Robinson, ASBLab researcher and MASc candidate in the Department of Mechanical & Industrial Engineering. “Alerts from the sensors inform Leia if a user has put a shirt on inside-out, or has become distracted during dressing. These alerts enable Leia to intelligently guide the user through the next best step.”

Robinson is collaborating with fellow MASc student Zinan Cen (MIE) from both the ASBLab and the Toronto Smart Materials and Structures Lab (TSMART). This research, funded by AGE-WELL Inc. and NSERC, demonstrates the value of adding wearable sensors to clothing to further develop the autonomy and intelligence of socially assistive robots.

-Published November 27, 2023 by Kendra Hunter